Dynamic Power Management for Edge AI: A Sustainable Self-Adaptive Approach

Funding year 2024 / Scholarship Call #19 / Scholarship ID: 7383

Edge AI enables real-time model training, inference, and decision-making in fields such as agriculture, medicine, and Industry 4.0. Unlike centralized cloud computing, Edge AI processes data closer to users, which reduces latency, improves data privacy, and lowers costs. Running Edge AI systems sustainably, however, raises its own challenges. These systems often depend on intermittent power sources such as solar panels, whose output fluctuates with the weather and the time of day. Because battery reserves are limited, the system must maintain application Quality of Service (QoS) while keeping energy consumption within what the power source and battery can supply.

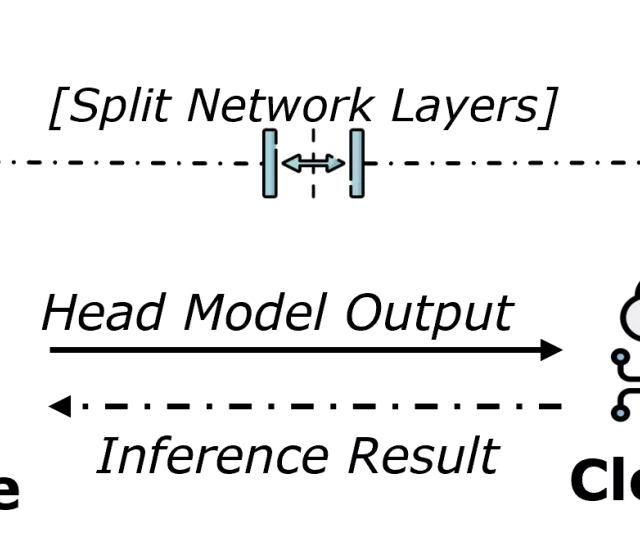

Dynamic power management is therefore necessary, but it is hard to implement because of diverse hardware architectures, heterogeneous AI models, and varying workloads. Traditional rule-based approaches map fixed thresholds to fixed actions. They are often inadequate for this complexity, because every combination of hardware, model, and workload would need its own hand-tuned rules. To address these limitations, this thesis proposes a Reinforcement Learning (RL)-based framework that enables intelligent, energy-aware adaptation for Edge AI applications.

The framework aims to optimize system behavior dynamically by adjusting resource usage and operational parameters in response to real-time power availability. Its RL component learns from the observed outcomes of its own decisions, so the framework continuously improves its adaptation policy. As a proof of concept, the framework will be applied to an energy-aware object detection use case on a test bed.
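The RL adaptation loop described above can be illustrated with a minimal tabular Q-learning sketch. The thesis does not say which RL algorithm it uses. Q-learning is swapped in here only because it is the simplest example. Everything else is also a hypothetical assumption for illustration: the three detector operating modes and their power draws and QoS scores, the 20 Wh battery, the square-wave solar profile, the reward weighting, and the names `solar_power`, `step_env`, `train`, and `policy`. The agent observes a coarse battery level and whether the sun is available. It then picks an operating mode and is rewarded for QoS, lightly penalized for energy use, and heavily penalized for draining the battery.

```python
import random
from collections import defaultdict

# Hypothetical detector operating modes: (name, power draw in W, QoS score in [0, 1])
MODES = [
    ("idle", 0.5, 0.0),
    ("small_model_low_fps", 3.0, 0.6),
    ("large_model_high_fps", 8.0, 1.0),
]
BATTERY_CAPACITY_WH = 20.0  # assumed battery size
STEP_HOURS = 0.25           # assumed 15-minute control interval
STEPS_PER_DAY = 96


def solar_power(step):
    """Assumed solar input in W: 10 W between 06:00 and 18:00, 0 W otherwise."""
    hour = (step * STEP_HOURS) % 24
    return 10.0 if 6 <= hour < 18 else 0.0


def discretize(battery_wh, solar_w):
    """Map continuous readings to a small discrete state: (battery quartile, sun available)."""
    level = min(int(battery_wh / BATTERY_CAPACITY_WH * 4), 3)
    return (level, solar_w > 0)


def step_env(battery_wh, step, action):
    """Run one mode for one interval; return (new battery level in Wh, reward)."""
    _, power_w, qos = MODES[action]
    new_battery = battery_wh + (solar_power(step) - power_w) * STEP_HOURS
    new_battery = min(new_battery, BATTERY_CAPACITY_WH)  # surplus solar energy is lost
    if new_battery <= 0:
        return 0.0, -10.0  # large penalty: the battery is drained
    # Reward QoS, lightly penalize energy use
    return new_battery, qos - 0.05 * power_w


def train(episodes=200, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning over simulated days with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * len(MODES))
    for _ in range(episodes):
        battery = BATTERY_CAPACITY_WH / 2
        for step in range(STEPS_PER_DAY):
            state = discretize(battery, solar_power(step))
            if rng.random() < epsilon:
                action = rng.randrange(len(MODES))  # explore
            else:
                action = max(range(len(MODES)), key=lambda a: q[state][a])  # exploit
            battery, reward = step_env(battery, step, action)
            next_state = discretize(battery, solar_power(step + 1))
            # Q-learning update toward the bootstrapped target
            td_target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (td_target - q[state][action])
    return q


def policy(q, battery_wh, solar_w):
    """Greedy mode choice for the current readings."""
    state = discretize(battery_wh, solar_w)
    return MODES[max(range(len(MODES)), key=lambda a: q[state][a])][0]
```

For example, `q = train()` followed by `policy(q, 18.0, 10.0)` returns the name of the mode that the learned table prefers with a nearly full battery in sunshine. On a real test bed, `step_env` would presumably be replaced by measured power and QoS values. The state would likely also need more information, such as a solar forecast, than this toy version uses.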

This thesis aims to contribute to the field of Edge AI by providing a sustainable power management solution, demonstrating how RL can enable efficient use of renewable energy sources while ensuring consistent system performance.

University | University of Applied Sciences [University]

Subject area

Technology

Open-source software used

Project results

Interim report for the master's thesis "Dynamic Power Management for Edge AI: A Sustainable Self-Adaptive Approach"