Neural network splitting for energy-efficient Edge-AI

Funding year 2024 / Scholarship Call #19 / Scholarship ID: 7170

My thesis focuses on developing DynaSplit, a framework that optimizes energy efficiency and latency when deploying deep neural networks in edge-cloud environments. Demand for intelligent applications on edge devices such as IoT sensors and smartphones is growing, but running these applications is challenging: edge devices have limited computational resources and tight energy budgets, while the applications often impose strict latency requirements.

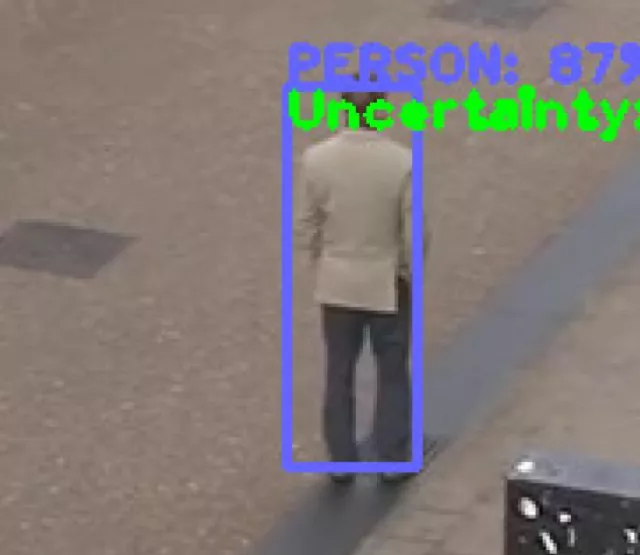

DynaSplit addresses these challenges by jointly optimizing the split point of a neural network (deciding which layers run on the edge device and which run in the cloud) and hardware parameters such as CPU frequency and accelerator utilization. Optimizing both together enables efficient resource utilization while keeping performance within Quality of Service (QoS) requirements.
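To make the joint search space concrete, here is a minimal sketch that models a configuration as a split point plus two hardware knobs and enumerates every combination. It is an illustration under stated assumptions, not DynaSplit's implementation: the `Config` fields `cpu_freq_mhz` and `use_accelerator`, the helpers `split_layers` and `search_space`, and the frequency values are all hypothetical, and accelerator utilization is reduced to an on/off flag for simplicity.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Config:
    split_point: int       # layers [0, split_point) run on the edge, the rest in the cloud
    cpu_freq_mhz: int      # hypothetical edge CPU frequency setting
    use_accelerator: bool  # hypothetical on/off switch for an edge accelerator


def split_layers(layers, split_point):
    """Partition an ordered list of layers into an edge part and a cloud part."""
    if not 0 <= split_point <= len(layers):
        raise ValueError("split_point must lie between 0 and len(layers)")
    return layers[:split_point], layers[split_point:]


def search_space(num_layers, cpu_freqs, accel_options=(False, True)):
    """Enumerate every (split point, CPU frequency, accelerator) combination.

    split_point == 0 means cloud-only, split_point == num_layers means edge-only.
    Redundant combinations (e.g. edge settings for a cloud-only split) are not pruned.
    """
    return [
        Config(s, f, a)
        for s, f, a in product(range(num_layers + 1), cpu_freqs, accel_options)
    ]


# Usage with a toy three-layer model and two hypothetical CPU frequencies.
layers = ["conv1", "conv2", "fc"]
edge_part, cloud_part = split_layers(layers, 2)  # (["conv1", "conv2"], ["fc"])
space = search_space(len(layers), cpu_freqs=[600, 1200])
print(len(space))  # 4 split points x 2 frequencies x 2 accelerator settings = 16
```

Even this toy space grows multiplicatively with every added knob, which is why exhaustive measurement quickly becomes impractical for real models and a dedicated optimization phase is useful.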

The framework employs a two-phase methodology: an Offline Phase that uses multi-objective optimization to identify Pareto-optimal configurations, and an Online Phase that dynamically selects the best configuration for incoming inference requests. Experimental results on real-world AI models show that DynaSplit can reduce energy consumption by up to 72% compared to cloud-only solutions, while meeting 90% of user-defined latency constraints.
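The two phases can be illustrated with a small sketch, assuming each candidate configuration has already been measured for energy and latency. Two simplifications are swapped in and should be read as such: the offline step here is a brute-force Pareto filter over a fixed candidate list rather than the multi-objective optimizer used in the thesis, and the online rule (choose the lowest-energy Pareto configuration that satisfies the request's latency limit, otherwise the fastest one) is an assumed selection policy, not necessarily DynaSplit's. The names `Measured`, `pareto_front`, and `select` are hypothetical, and the numbers are placeholders rather than results.

```python
from typing import NamedTuple


class Measured(NamedTuple):
    name: str          # hypothetical configuration label
    energy_j: float    # energy per inference in joules (lower is better)
    latency_ms: float  # end-to-end latency in milliseconds (lower is better)


def dominates(a, b):
    """True if a is no worse than b in both objectives and strictly better in one."""
    return (
        a.energy_j <= b.energy_j
        and a.latency_ms <= b.latency_ms
        and (a.energy_j < b.energy_j or a.latency_ms < b.latency_ms)
    )


def pareto_front(candidates):
    """Offline phase (simplified): keep candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


def select(front, latency_limit_ms):
    """Online phase (assumed rule): lowest-energy configuration meeting the limit;
    if none meets it, fall back to the fastest configuration."""
    feasible = [c for c in front if c.latency_ms <= latency_limit_ms]
    if feasible:
        return min(feasible, key=lambda c: c.energy_j)
    return min(front, key=lambda c: c.latency_ms)


# Placeholder numbers for illustration only, not measurements from the thesis.
candidates = [
    Measured("cloud-only", energy_j=5.0, latency_ms=40.0),
    Measured("split-a", energy_j=2.0, latency_ms=80.0),
    Measured("split-b", energy_j=3.0, latency_ms=90.0),  # dominated by split-a
    Measured("edge-only", energy_j=1.0, latency_ms=200.0),
]
front = pareto_front(candidates)
print([c.name for c in front])    # ['cloud-only', 'split-a', 'edge-only']
print(select(front, 100.0).name)  # split-a
print(select(front, 10.0).name)   # cloud-only (no configuration meets 10 ms)
```

Restricting the online search to the Pareto front keeps per-request selection cheap: every discarded configuration is matched or beaten in both energy and latency by some front member, so under this rule it could never be the better choice.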

By enabling efficient and sustainable deployment of AI models in resource-constrained environments, DynaSplit demonstrates the potential of combining advanced optimization techniques with the flexibility of split computing.

Institution type (University | University of Applied Sciences): University
