Funding year 2024 / Scholarship Call #19 / Project ID: 7170 / Project: Neural network splitting for energy-efficient Edge-AI

How can AI models run efficiently on edge devices? Discover how DynaSplit performed in tests of energy efficiency, latency, and scalability.

Introduction: Bridging the AI Efficiency Gap

Deploying artificial intelligence (AI) on edge devices is a balancing act. Edge devices, such as IoT sensors or smartphones, often have limited computational power and energy resources. On the other hand, relying solely on the cloud for processing can introduce unacceptable delays for real-time applications.

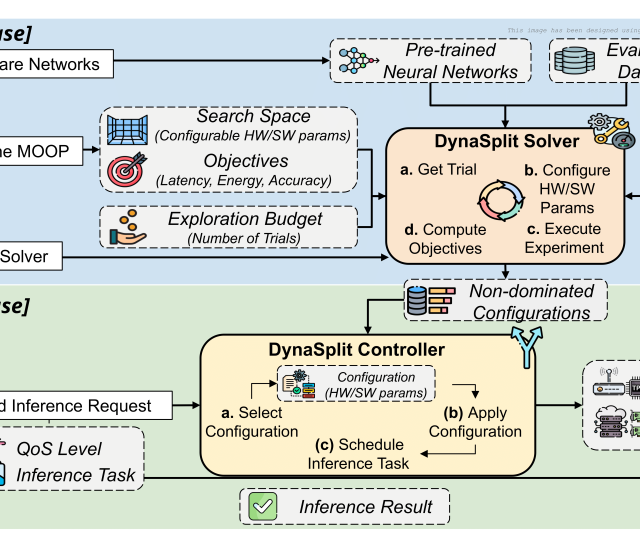

This is where DynaSplit comes in. By dynamically splitting neural networks between edge and cloud and optimizing hardware parameters, the framework promises to balance energy consumption, latency, and scalability. In this post, we’ll explore the results of DynaSplit’s evaluation and reflect on its real-world potential, limitations, and next steps.
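To make the idea of choosing a split point more concrete, here is a minimal Python sketch of one way such a decision could look: pick the lowest-energy configuration that still meets a request's latency bound. This is not DynaSplit's actual implementation. The `Config` fields, the `pick_config` helper, the fallback rule, and all numbers are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Config:
    """One hypothetical deployment option (all fields are illustrative)."""
    split_layer: int     # layer after which execution moves to the cloud (0 = cloud-only)
    cpu_freq_mhz: int    # example of a tunable edge hardware parameter
    latency_ms: float    # profiled end-to-end latency
    energy_j: float      # profiled energy per inference


def pick_config(configs: Sequence[Config], max_latency_ms: float) -> Optional[Config]:
    """Return the lowest-energy config that meets the latency bound.

    Assumption: if no config meets the bound, fall back to the fastest one.
    Returns None when there are no configs at all.
    """
    if not configs:
        return None
    feasible = [c for c in configs if c.latency_ms <= max_latency_ms]
    if feasible:
        return min(feasible, key=lambda c: c.energy_j)
    return min(configs, key=lambda c: c.latency_ms)


# Made-up numbers for illustration only, not measurements from the evaluation.
CANDIDATES = [
    Config(split_layer=0, cpu_freq_mhz=600, latency_ms=90.0, energy_j=9.0),
    Config(split_layer=8, cpu_freq_mhz=1200, latency_ms=140.0, energy_j=5.0),
    Config(split_layer=16, cpu_freq_mhz=1800, latency_ms=400.0, energy_j=2.5),
]

if __name__ == "__main__":
    print(pick_config(CANDIDATES, max_latency_ms=150.0))
```

With these made-up candidates, a 150 ms bound selects the split at layer 8: it is the cheapest option in energy terms among those that are fast enough.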

Evaluating DynaSplit: The Setup

The evaluation was conducted using two key experiments to test DynaSplit’s capabilities under realistic conditions:

- Testbed Experiment: Ran 50 user requests per neural network, assessing energy consumption, latency, and Quality of Service (QoS) adherence for each configuration.

- Simulation Experiment: Tested scalability with up to 10,000 requests using pre-recorded metrics from the testbed.

The experiments used two AI models: VGG16 and Vision Transformer (ViT). These were deployed in hybrid edge-cloud setups to replicate diverse workloads and scenarios.
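The simulation idea, replaying pre-recorded testbed metrics for a much larger number of requests, can be sketched roughly as follows. This is a simplified illustration, not the simulator used in the evaluation: the `replay` function, the configuration names, the uniform random choice of configuration, and the recorded values are all assumptions.

```python
import random
from typing import Dict, List, Tuple

Measurement = Tuple[float, float]  # (latency_ms, energy_j)


def replay(recorded: Dict[str, List[Measurement]],
           n_requests: int,
           seed: int = 0) -> List[Tuple[str, float, float]]:
    """Draw one pre-recorded measurement per simulated request.

    Simplification: each request picks a configuration uniformly at random.
    A real scheduler would choose based on the request's constraints.
    """
    if not recorded or any(not samples for samples in recorded.values()):
        raise ValueError("every configuration needs at least one measurement")
    rng = random.Random(seed)   # seeded so that runs are reproducible
    names = sorted(recorded)    # fixed order keeps the result deterministic
    trace = []
    for _ in range(n_requests):
        name = rng.choice(names)
        latency_ms, energy_j = rng.choice(recorded[name])
        trace.append((name, latency_ms, energy_j))
    return trace


# Made-up measurements for illustration only, not data from the testbed.
RECORDED = {
    "edge_only": [(410.0, 2.4), (395.0, 2.6)],
    "split": [(150.0, 4.8), (160.0, 5.1)],
    "cloud_only": [(95.0, 9.2), (88.0, 8.9)],
}

if __name__ == "__main__":
    trace = replay(RECORDED, n_requests=10_000, seed=42)
    print(len(trace), trace[0])
```

Because the simulation only samples from recorded measurements, it can scale to thousands of requests without running a single inference on real hardware.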

Key Findings: What the Tests Showed

- Energy Efficiency: DynaSplit achieved energy savings of up to 72%. The effect was most pronounced for VGG16, where the median energy consumption per inference stayed under 3 J, comparable to edge-only configurations. This makes the framework a sustainable choice for energy-sensitive applications.

- Latency Goals: Around 90% of latency constraints were met across workloads, ensuring suitability for real-time applications like video analytics and IoT monitoring.

- Scalability: In simulations with 10,000 requests, DynaSplit maintained consistent performance, indicating that it can handle large-scale deployments.

- Accuracy Preservation: Accuracy differences across configurations were minimal, with less than 1% variation, confirming the framework's reliability for AI inference.

These results demonstrate that DynaSplit effectively combines energy efficiency and latency adherence while maintaining inference quality.
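For readers who want to compute similar figures on their own measurements, here is a small sketch of the two headline metrics: the share of requests that met their latency bound, and the relative saving in median energy. The function names and the choice of baseline are assumptions, since this post does not state which baseline the reported savings refer to.

```python
from statistics import median
from typing import Sequence


def qos_adherence(latencies_ms: Sequence[float], bounds_ms: Sequence[float]) -> float:
    """Fraction of requests whose latency stayed within its bound."""
    if not latencies_ms or len(latencies_ms) != len(bounds_ms):
        raise ValueError("need one latency bound per request")
    met = sum(1 for lat, bound in zip(latencies_ms, bounds_ms) if lat <= bound)
    return met / len(latencies_ms)


def energy_saving_pct(energy_j: Sequence[float], baseline_j: Sequence[float]) -> float:
    """Saving in median energy per inference, in percent of the baseline median.

    Assumption: the baseline is whatever reference configuration you compare against.
    """
    base = median(baseline_j)
    if base <= 0:
        raise ValueError("baseline median must be positive")
    return 100.0 * (base - median(energy_j)) / base


if __name__ == "__main__":
    # Made-up values for illustration only, not results from the evaluation.
    print(qos_adherence([100, 200, 300, 400], [150, 150, 350, 350]))
    print(energy_saving_pct([2.0, 3.0, 4.0], [10.0, 12.0, 14.0]))
```

Using the median rather than the mean keeps a few outlier requests from dominating the energy comparison.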

Implications: Why These Results Matter

The evaluation highlights several real-world benefits of DynaSplit:

- Sustainability: Energy-efficient AI frameworks like DynaSplit can contribute to reducing the carbon footprint of AI deployments, aligning with global sustainability goals.

- Dynamic Adaptability: Its ability to optimize across varying workloads ensures that DynaSplit remains effective in dynamic and resource-constrained environments.

- Versatile Applications: With its balance of latency and energy, DynaSplit has potential in applications ranging from healthcare monitoring to smart cities and autonomous vehicles.

Addressing Challenges and Limitations

Despite its strengths, the evaluation also revealed some challenges:

- Hardware Dependency: DynaSplit’s performance can vary based on the capabilities of edge devices. For example, ViT required cloud configurations due to memory constraints.

- Re-Optimization Needs: Significant hardware or model changes require offline re-optimization, which can be time-consuming.

- Network Dependency: Reliable connectivity is crucial for split configurations, making network conditions a key factor in its success.

Acknowledging these limitations is crucial for refining and expanding the framework.

Future Directions: The Path Ahead

DynaSplit’s evaluation is just the beginning. Future research could focus on:

- Resource Sharing: Exploring multi-user scenarios to enhance resource allocation across shared infrastructures.

- Serverless Environments: Testing DynaSplit in serverless setups to address latency from cloud cold starts.

- Fine-Grained Splitting: Investigating more granular split configurations for larger models and diverse hardware setups.

By tackling these areas, DynaSplit could unlock even greater efficiency and applicability.

Conclusion

DynaSplit showcases how dynamic neural network splitting and hardware optimization can address the challenges of energy efficiency, latency, and scalability in edge-cloud AI systems. The framework’s evaluation highlights its potential to transform real-world applications, from IoT to industrial AI.

As we look to the future, refining DynaSplit and exploring new use cases will pave the way for more sustainable and efficient AI deployments. Stay tuned as this journey continues!