Funding year 2023 / Scholarship Call #18 / Project ID: 6801 / Project: Communication and Energy Efficient Edge Artificial Intelligence Framework for Internet of Things

We introduce a framework called FedCD, which allows heterogeneous clients to design and train their own models fitting their needs while still benefiting from the accumulated knowledge from the federated clients in a privacy-preserving manner.

Federated learning (FL) has attracted considerable attention for its ability to collaboratively train machine learning models while preserving the privacy of the clients. One of the many challenges in FL is heterogeneity, which appears in all aspects of the learning process. Participating clients differ in networking and computational power, and this system heterogeneity leaves a number of devices unable to train the shared model. FedCD is a communication-efficient FL framework that allows clients to train their personalized models using knowledge distillation.

Many applications, particularly in business-facing settings, could benefit from each client having its own unique model, well suited to that client's data and resources. For example, in cross-silo applications where several organizations collaborate without sharing their private data, it may be more desirable and beneficial for them to train personalized models that fit their needs and distinct specifications. Furthermore, the AI-as-a-service model demands more specialization. Imagine a typical AI vendor of, e.g., virtual shopping assistants that may have dozens of clients. Each client model is distinct and solves a specific task. In a conventional setting, each client model is trained only on the data at its disposal. It would be highly beneficial if clients trained on their local data could transfer their acquired knowledge to other clients without sharing their private data or model.

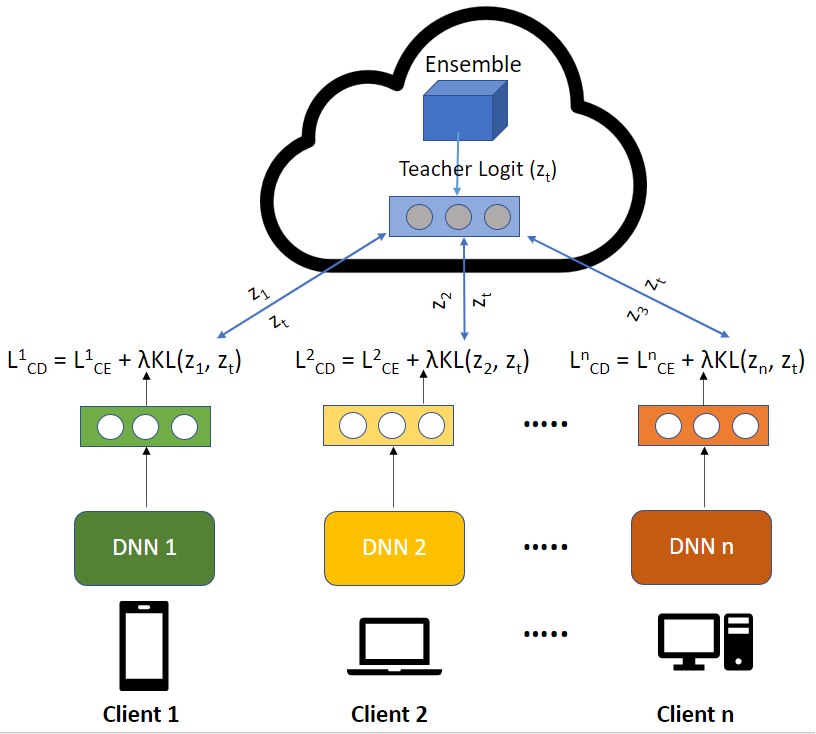

As shown in the figure, each client has its own model and trains it independently on its private data. The process of training personalized models using FedCD is summarized below:

- Each client designs its own model to fit its needs, based on its training data and computing resources, and performs local training on that data.

- After a few epochs of local training, each client uploads its logits to the parameter server.

- The parameter server receives the logits from the clients and fuses them into teacher logits, combining the clients' local knowledge into a single teacher representation.

- After receiving the teacher logits from the parameter server, clients update their local models using knowledge distillation.
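
The logit exchange in the steps above can be illustrated with a minimal sketch. It is not the FedCD implementation: the function names (`fuse_logits`, `distillation_loss`) are hypothetical, the fusion rule is assumed to be a plain average because the text does not specify one, and all clients are assumed to compute their logits on the same set of samples. The distillation term is the commonly used KL divergence between temperature-softened teacher and student distributions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over one list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_logits(client_logits):
    """Server side: fuse client logits into teacher logits.

    client_logits[c][n][k] is client c's logit for sample n and class k.
    ASSUMPTION: fusion is a plain per-sample, per-class average; the
    actual FedCD fusion rule is not given in the text.
    """
    num_clients = len(client_logits)
    return [
        [sum(per_class) / num_clients for per_class in zip(*per_sample)]
        for per_sample in zip(*client_logits)
    ]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Client side: mean KL(teacher || student) on softened distributions.

    ASSUMPTION: standard knowledge-distillation loss, without the
    optional temperature-squared scaling factor.
    """
    total = 0.0
    for student, teacher in zip(student_logits, teacher_logits):
        p = softmax(teacher, temperature)
        q = softmax(student, temperature)
        total += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return total / len(student_logits)


# Two hypothetical clients, two shared samples, three classes.
client_a = [[2.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
client_b = [[4.0, 2.0, 1.0], [0.0, 3.0, 2.0]]

teacher = fuse_logits([client_a, client_b])
loss_a = distillation_loss(client_a, teacher)  # term client A would minimize
```

In a real training loop, each client would add this distillation term to its usual supervised loss and backpropagate through its own model. Only logits cross the network, which is why clients can use different architectures.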

The training continues in this iterative fashion until convergence. FedCD improves the performance of FL in the presence of model and data heterogeneity. The framework allows clients with different network and computation capabilities to design their own unique models and benefit from the knowledge shared by the rest of the clients. FedCD outperforms both classical FedAvg and knowledge-distillation-based approaches in IID and non-IID settings.