Funding year 2023 / Scholarship Call #18 / Project ID: 6885 / Project: Increasing Trustworthiness of Edge AI by Adding Uncertainty Estimation to Object Detection

Uncertainty estimation helps models identify difficult inputs, which can then be used for continual learning so that models evolve over time. Active learning uses uncertainty as a metric to prioritize the most valuable data, reducing labeling costs.

Adapt the Model Iteratively to New Data through Continual Learning

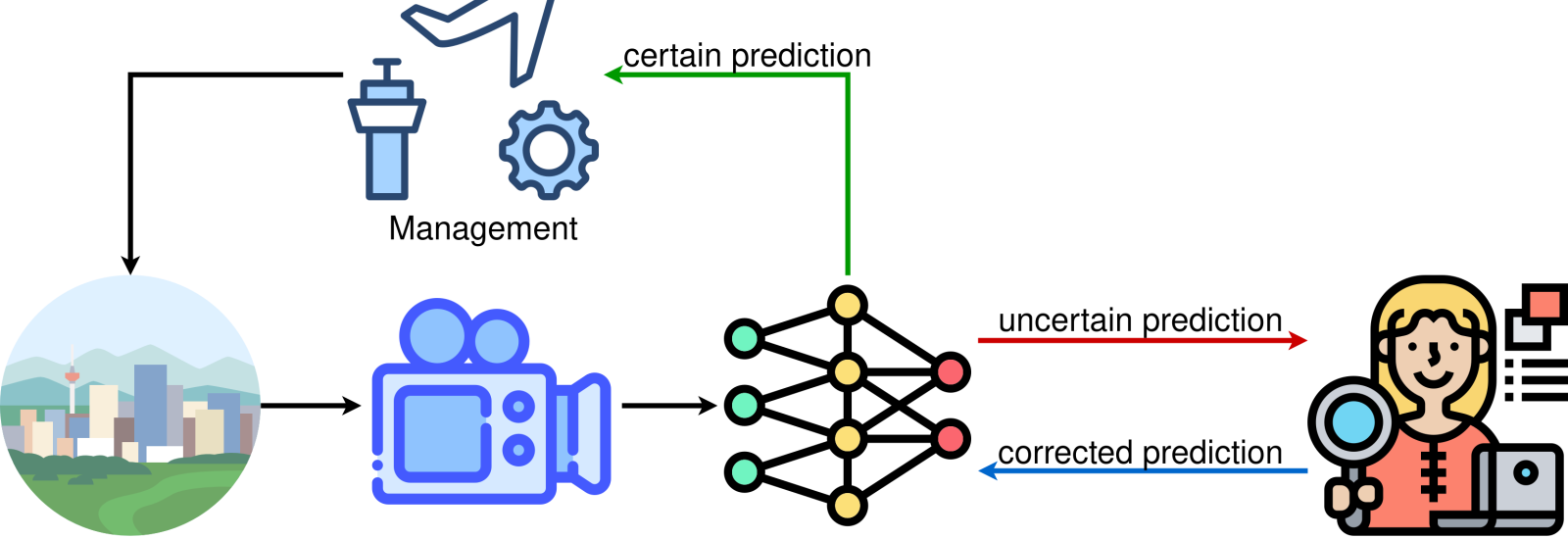

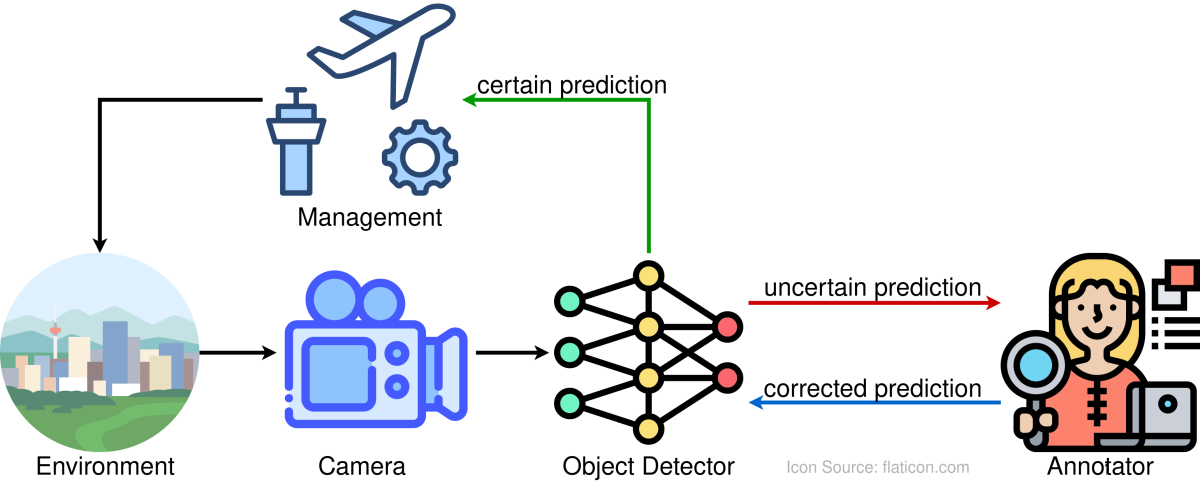

The primary advantage of equipping a model with uncertainty estimation is that it can identify inputs it cannot handle reliably and withhold a low-confidence prediction. Control can then pass to other experts that can make more confident predictions. Uncertainty estimation has further applications, however. Rather than simply being filtered out, uncertain inputs can serve as training samples that further improve the model's performance [1]. A desirable ability of a model is to adapt to its environment over time through continual learning (CL) [2]. In object detection, camera inputs can be labeled by annotators (such as humans) and then used to retrain the model, so that it predicts with greater confidence the next time a similar input appears.
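The filtering step described above can be sketched in a few lines. This is a minimal illustration, not the project's implementation: it assumes the detector outputs a class-probability vector per detection, uses Shannon entropy as the uncertainty score, and the function names and the threshold of 0.5 nats are hypothetical choices.

```python
import math

def predictive_entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def route_detection(probs, threshold=0.5):
    """Accept a confident prediction, or defer it to another expert.

    `threshold` is a hypothetical value; in practice it would be tuned
    on validation data.
    """
    return "defer" if predictive_entropy(probs) > threshold else "accept"

confident = [0.97, 0.02, 0.01]   # one class clearly dominates
ambiguous = [0.40, 0.35, 0.25]   # probability mass is spread out

print(route_detection(confident))  # accept
print(route_detection(ambiguous))  # defer
```

Deferred inputs do not have to be discarded: they are natural candidates for annotation and later retraining.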

Leveraging Active Learning through Uncertainty Estimation

The primary challenge of CL is the considerable time and cost it requires, because manual labeling/annotation is expensive. It is therefore crucial to select images for annotation carefully, based on how much novel information they convey. This is where active learning (AL) becomes relevant, specifically uncertainty-driven active learning [3]. AL aims to improve the model's performance over time by focusing on the inputs that provide the most new information. Uncertainty estimation is an effective selection criterion here, because high-uncertainty input images are the ones the model has not yet learned to handle and therefore carry valuable information for improving it.
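As a rough sketch of uncertainty-driven selection, the snippet below ranks images by an uncertainty score and keeps only as many as a labeling budget allows. It rests on assumptions that are not from the project: each image comes with a list of per-detection uncertainty scores, the image-level score is simply the maximum of those, and the names `image_uncertainty` and `select_for_annotation` are hypothetical. Other aggregation schemes are possible.

```python
def image_uncertainty(detection_uncertainties):
    """Aggregate per-detection scores into one image-level score.

    Taking the maximum is an assumption made for this sketch.
    """
    return max(detection_uncertainties, default=0.0)

def select_for_annotation(uncertainty_by_image, budget):
    """Return the `budget` image IDs with the highest uncertainty."""
    ranked = sorted(uncertainty_by_image.items(),
                    key=lambda item: item[1], reverse=True)
    return [image_id for image_id, _ in ranked[:budget]]

# Hypothetical per-detection uncertainty scores for four camera images.
detections = {
    "img_001": [0.05, 0.12],
    "img_002": [0.87, 0.10],
    "img_003": [0.45],
    "img_004": [0.30, 0.91, 0.22],
}
scores = {image_id: image_uncertainty(u) for image_id, u in detections.items()}
to_label = select_for_annotation(scores, budget=2)
print(to_label)  # ['img_004', 'img_002']
```

With a budget of two, only the two most uncertain images are sent to the annotators; the rest are skipped, which is where the labeling cost is saved.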

Dealing with Catastrophic Forgetting

Another challenge of CL is catastrophic forgetting, which occurs when the model adapts to new training samples but forgets previously acquired knowledge in the process. If this issue is not addressed during training, the model's overall performance may decline even though it keeps learning from new samples. Several options are available [3]:

- Regularization-based approaches add constraints to prevent the model from drastically altering weights critical for previously learned tasks.

- Replay-based approaches involve storing and replaying a small subset of past data during the training process to help the model retain old knowledge.

- Optimization-based approaches modify the optimization procedure itself, for example through gradient projection, which restricts updates to directions that interfere less with previously learned tasks.

- Representation-based approaches aim to learn robust feature representations that are transferable across different tasks.

- Architecture-based approaches let the model architecture grow with new tasks by allocating task-specific parameters.
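To make the first item in the list more concrete, a regularization-based constraint can be written as a penalty added to the training loss. The sketch below uses a quadratic penalty similar in spirit to elastic weight consolidation; it is an illustration under assumptions, not the method used in this project. The per-weight `importance` values are hypothetical (in practice they would be estimated, for example from Fisher information), and weights are plain Python lists for readability.

```python
def regularization_penalty(weights, old_weights, importance, strength=1.0):
    """Quadratic penalty for moving important weights away from old values.

    penalty = 0.5 * strength * sum_i importance_i * (w_i - w_old_i)^2
    """
    return 0.5 * strength * sum(
        imp * (w - w_old) ** 2
        for w, w_old, imp in zip(weights, old_weights, importance)
    )

old_weights = [1.0, -2.0]
importance = [10.0, 0.1]   # first weight matters far more for old tasks

# Changing the important weight is penalized heavily ...
print(regularization_penalty([1.5, -2.0], old_weights, importance))
# ... while the same change to the unimportant weight costs little.
print(regularization_penalty([1.0, -1.5], old_weights, importance))
```

During retraining, this penalty would be added to the loss on the new samples, so the optimizer is free to change unimportant weights but is discouraged from overwriting the ones that previous knowledge depends on.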

Choosing the right approach depends on the specific use-case, available computational resources, and privacy constraints. Replay-based methods are simple to implement, but do not suit all scenarios. For instance, in some privacy-sensitive environments, storing past data for replay might not be feasible, which would make architecture-based or optimization-based approaches more appropriate.
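For settings where storing past data is acceptable, the replay idea can be sketched as a small fixed-size buffer. This is a minimal illustration under assumptions: the class name `ReplayBuffer` and its methods are hypothetical, samples are represented by plain strings, and reservoir sampling is used here as one possible way to keep a uniform random subset of everything seen so far.

```python
import random

class ReplayBuffer:
    """Fixed-size memory of past samples, filled by reservoir sampling."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.samples = []
        self.seen = 0                      # total samples offered so far
        self._rng = random.Random(seed)

    def add(self, sample):
        """Offer a sample; every sample seen so far is kept with equal probability."""
        self.seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(sample)
        else:
            slot = self._rng.randrange(self.seen)
            if slot < self.capacity:
                self.samples[slot] = sample

    def mixed_batch(self, new_samples, replay_count):
        """Combine new samples with up to `replay_count` stored old ones."""
        k = min(replay_count, len(self.samples))
        return list(new_samples) + self._rng.sample(self.samples, k)

buffer = ReplayBuffer(capacity=3, seed=0)
for i in range(10):
    buffer.add(f"old_{i}")

batch = buffer.mixed_batch(["new_a", "new_b"], replay_count=2)
print(len(buffer.samples), len(batch))  # 3 4
```

Each retraining batch then mixes newly labeled samples with a few stored ones, so the model keeps seeing examples of what it learned earlier. The buffer size bounds both memory use and the amount of past data that is retained, which is the part that may conflict with privacy constraints.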

Conclusion

Uncertainty estimation is not just a safeguard against low-confidence predictions; it is also a crucial component of long-term model improvement, driving continual and active learning in machine learning systems.

References

[1] Nguyen, T., Nguyen, K., Nguyen, T., Nguyen, T., Nguyen, A., & Kim, K. (2023, December). Hierarchical Uncertainty Aggregation and Emphasis Loss for Active Learning in Object Detection. In 2023 IEEE International Conference on Big Data (BigData) (pp. 5311-5320). IEEE.

[2] Hu, Q., Ji, L., Wang, Y., Zhao, S., & Lin, Z. (2024). Uncertainty-driven active developmental learning. Pattern Recognition, 151, 110384.

[3] Wang, L., Zhang, X., Su, H., & Zhu, J. (2024). A comprehensive survey of continual learning: theory, method and application. IEEE Transactions on Pattern Analysis and Machine Intelligence.