Funding year 2023 / Scholarship Call #18 / Project ID: 6885 / Project: Increasing Trustworthiness of Edge AI by Adding Uncertainty Estimation to Object Detection

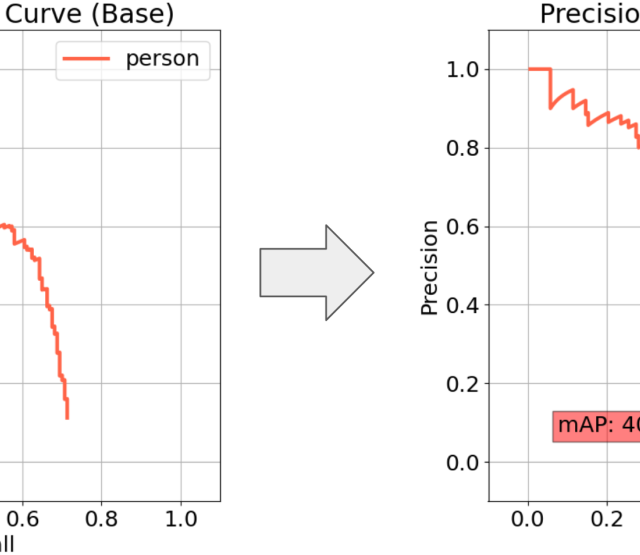

The experimental setup has been reworked from the ground up by switching from the SSD model to the latest YOLO11 object detector.

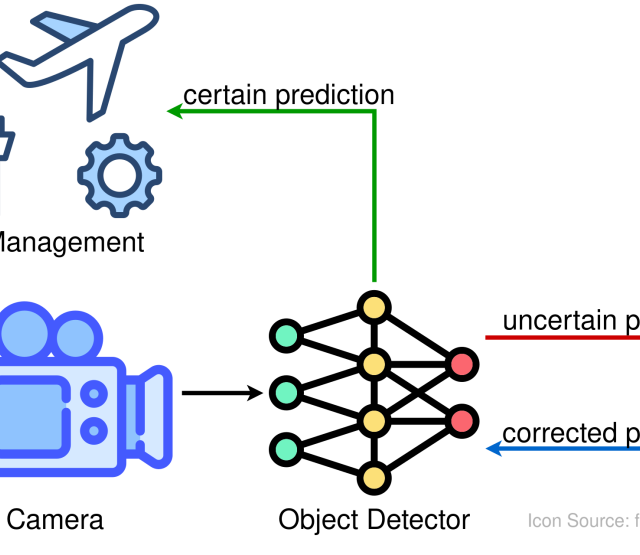

YOLO11 [2] is the latest iteration of a popular series of object detectors. Here, we explore two approaches to adding uncertainty estimation to YOLO11, paying close attention not only to the mean Average Precision (mAP) score but also to the computational overhead of the uncertainty estimation. For the previous experiments and the insights that led to the current setup, see the previous blog post.

Evaluation Dataset

To evaluate uncertainty estimation, a common approach is to test the model on data whose distribution differs from that of its training data. Cityscapes [1] is a dataset of urban street scenes captured from the perspective of a driving car, showing pedestrians and vehicles (cars, trains, bicycles, etc.). Foggy-Cityscapes [5] is a modified version of Cityscapes that contains the same images with artificial fog added to them. In the following evaluation, Cityscapes serves as the training dataset and Foggy-Cityscapes as the test dataset, challenging the models with a weather condition they have not seen during training.
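To make the distribution shift more concrete: Foggy-Cityscapes [5] builds on the standard optical model of fog, in which each clear-weather pixel is blended with the atmospheric light according to a transmittance that decays exponentially with scene depth, t(x) = exp(-β·d(x)). The following is a minimal sketch of only this core formula, applied per pixel. The default attenuation coefficient β = 0.01, the pure white atmospheric light, and the nested-list image format are illustrative assumptions, and the original pipeline in [5] involves further processing steps that are not reproduced here.

```python
import math


def add_synthetic_fog(pixel_rgb, depth_m, beta=0.01, atmospheric_light=(255.0, 255.0, 255.0)):
    """Apply the standard optical fog model I = R * t + L * (1 - t) to one pixel.

    pixel_rgb: clear-weather RGB value R in [0, 255]
    depth_m: distance of the scene point from the camera in metres
    beta: attenuation coefficient (assumed value; larger means denser fog)
    atmospheric_light: global atmospheric light L (assumed pure white)
    """
    t = math.exp(-beta * depth_m)  # transmittance: share of scene light reaching the camera
    return tuple(r * t + l * (1.0 - t) for r, l in zip(pixel_rgb, atmospheric_light))


def fog_image(image, depth, beta=0.01):
    """Fog a whole image given as rows of RGB tuples plus a depth map of the same shape."""
    return [
        [add_synthetic_fog(px, d, beta) for px, d in zip(img_row, depth_row)]
        for img_row, depth_row in zip(image, depth)
    ]
```

Nearby pixels keep almost their original color, while distant pixels converge to the atmospheric light, which is why small or far-away objects become especially hard to detect in the foggy test images.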

Implemented Models

The following implementations are all based on a YOLO11 detector pre-trained on the MS COCO dataset [3]. Using transfer learning, they are further trained on Cityscapes and finally evaluated on the Foggy-Cityscapes dataset.

- Base: The YOLO11 base model without any uncertainty estimation.

- EDL MEH: Evidential deep learning (EDL) with a model evidence head (MEH), implemented according to Park et al. [4].

- MC-Dropout: A Monte Carlo Dropout implementation similar to [6].
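To illustrate what kind of uncertainty signal the two approaches produce, here is a minimal sketch of the generic quantities behind them, not of the YOLO11 implementations evaluated here. In the standard EDL formulation, a head outputs non-negative evidence per class, which parameterizes a Dirichlet distribution; the uncertainty u = K/S equals 1 without any evidence and shrinks as evidence accumulates. The model evidence head of Park et al. [4] builds on this idea but is not reproduced here. For MC-Dropout, dropout stays active at inference time, several stochastic forward passes are averaged, and the entropy of the averaged class distribution serves as the uncertainty. The toy head, its weights, and its dropout rate are hypothetical.

```python
import math
import random


def edl_uncertainty(evidence):
    """Generic EDL: turn non-negative per-class evidence into Dirichlet-based outputs."""
    alpha = [e + 1.0 for e in evidence]  # Dirichlet concentration parameters
    strength = sum(alpha)  # Dirichlet strength S
    expected_probs = [a / strength for a in alpha]
    uncertainty = len(alpha) / strength  # u = K / S; equals 1.0 when all evidence is zero
    return expected_probs, uncertainty


def softmax(logits):
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mc_dropout_predict(stochastic_forward, x, passes=10):
    """MC-Dropout: average several stochastic forward passes and return the predictive entropy."""
    samples = [softmax(stochastic_forward(x)) for _ in range(passes)]
    num_classes = len(samples[0])
    mean_probs = [sum(s[k] for s in samples) / passes for k in range(num_classes)]
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    return mean_probs, entropy


# Hypothetical toy classification head (2 classes, 3 input features) whose dropout
# stays active at inference time; weights and dropout rate are illustrative only.
WEIGHTS = [[1.0, -0.5, 0.3], [-0.2, 0.8, 0.1]]
RNG = random.Random(0)


def toy_head_with_dropout(features, p_drop=0.3):
    # Inverted dropout: zero each input with probability p_drop and rescale the rest.
    kept = [f / (1.0 - p_drop) if RNG.random() >= p_drop else 0.0 for f in features]
    return [sum(w * f for w, f in zip(row, kept)) for row in WEIGHTS]
```

For instance, `edl_uncertainty([9.0, 0.0, 0.0])` yields u = 3/12 = 0.25, while zero evidence yields u = 1.0, and `mc_dropout_predict(toy_head_with_dropout, [1.0, 0.5, 2.0], passes=20)` returns the averaged class probabilities together with their entropy. In these generic forms, EDL needs a single forward pass per input, whereas MC-Dropout needs several.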

A previous blog post on metrics describes in more detail how we incorporate uncertainty estimation into common object detection metrics. Here, the evaluation metrics are:

- Precision: The fraction of the model's predictions that turn out to be correct. False positives (predictions that do not match any real object) strongly reduce this metric.

- Recall: The fraction of all objects in the images that the model finds. False negatives (objects that were not found) strongly reduce this metric.

- mAP50: Mean Average Precision, counting a prediction as correct only if the intersection over union (IoU) of the predicted and the actual bounding box is at least 50%.

- mAP50-95: The mean Average Precision averaged over IoU thresholds from 50% to 95% in steps of 5%. Since the higher thresholds demand tighter boxes, it also reflects localization accuracy and complements mAP50.

- FPS: Frames per second, how fast the model can process images. Higher is better, especially for real-time edge systems.
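The overlap criterion and the precision and recall definitions above can be sketched for a single class at a single confidence threshold. This is a minimal illustration, assuming boxes given as (x1, y1, x2, y2) tuples and greedy matching in order of confidence, with each ground-truth box matched at most once. Computing the full AP additionally requires sweeping the confidence threshold to obtain a precision-recall curve and integrating it, which is omitted here; in practice, evaluation tools such as the Ultralytics validator or pycocotools compute these metrics.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truths, iou_threshold=0.5):
    """Greedily match single-class predictions (confidence, box) to ground-truth boxes."""
    matched = set()
    true_positives = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        # Find the best still-unmatched ground-truth box for this prediction.
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    false_positives = len(predictions) - true_positives
    false_negatives = len(ground_truths) - true_positives
    precision = true_positives / (true_positives + false_positives) if predictions else 0.0
    recall = true_positives / (true_positives + false_negatives) if ground_truths else 0.0
    return precision, recall


# mAP50-95 averages the AP over these ten IoU thresholds: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS_50_95 = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

An unmatched prediction counts as a false positive and lowers precision, while an unmatched ground-truth box counts as a false negative and lowers recall.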

Evaluation

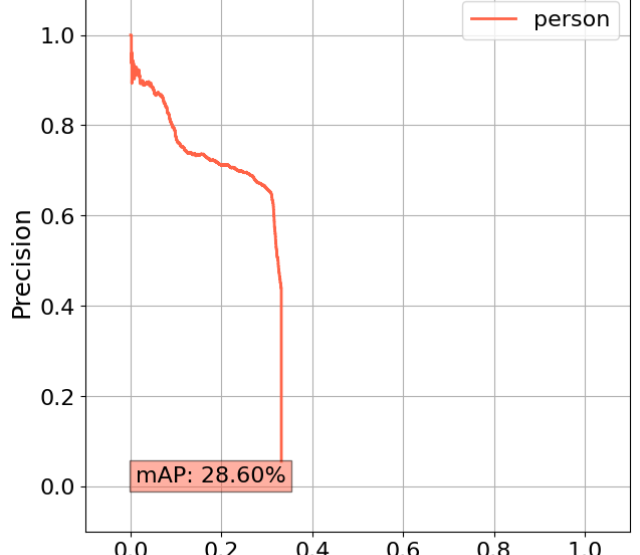

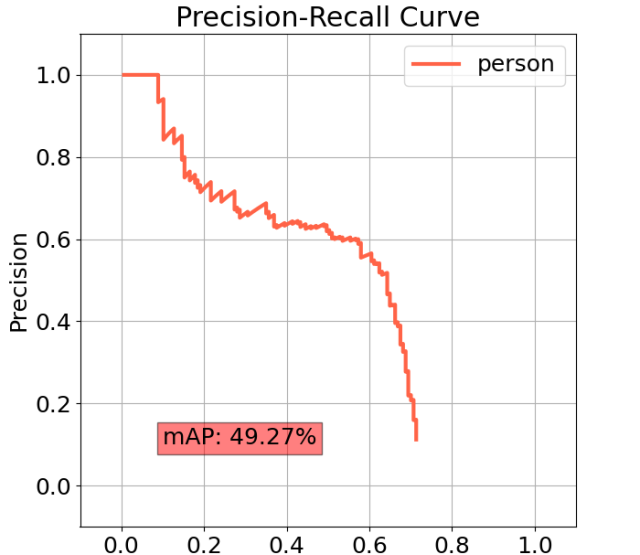

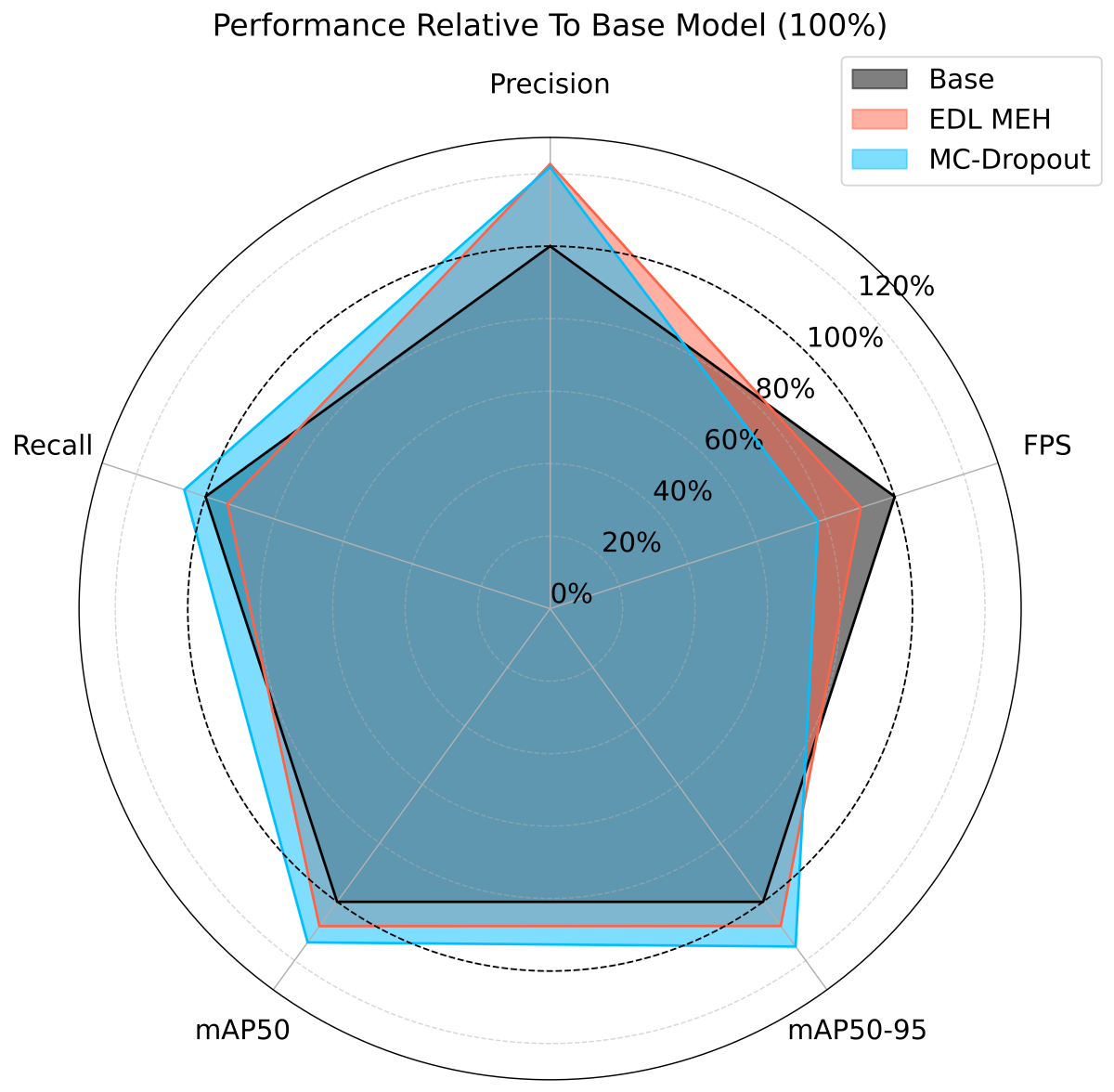

The polar chart compares all three models across the five metrics. Note that all metric values have been normalized to the base model (black), which represents 100% in every aspect.
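The normalization used in the chart amounts to dividing each metric by the base model's value. A minimal sketch, where the metric names are placeholders: since all five metrics are "higher is better", values above 100% indicate an improvement over the baseline and values below 100% a loss, so a model at 90% FPS runs about 10% slower than the base model.

```python
def normalize_to_baseline(model_metrics, baseline_metrics):
    """Express each metric as a percentage of the base model's value (base model = 100%)."""
    return {name: 100.0 * value / baseline_metrics[name] for name, value in model_metrics.items()}


def relative_change(normalized):
    """Difference to the baseline in percentage points, e.g. 90.0 -> -10.0."""
    return {name: pct - 100.0 for name, pct in normalized.items()}
```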

As seen in the chart, both uncertainty estimation approaches outperform the baseline in precision by about 20%. However, they also run slower: MC-Dropout reaches about 20% lower FPS and EDL MEH about 10% lower FPS than the baseline. This highlights the trade-off between uncertainty estimation and inference speed that always has to be balanced. The remaining metrics are all close to the baseline, with MC-Dropout slightly ahead of EDL MEH overall.

Summary

The recent results with the YOLO11 detector confirm what had already been shown for the previously used SSD detector: uncertainty estimation helps to reduce false positives. However, other metrics such as recall change only marginally or not at all, meaning uncertainty estimation does not improve the object detector overall (e.g., at finding all objects in an image). Still, the predictions the model does make become more trustworthy. Additionally, the models with uncertainty estimation now maintain the mean Average Precision of the baseline, mostly due to the use of transfer learning and more robust uncertainty estimation approaches. Further experiments, e.g., with additional metrics and datasets, are needed to strengthen these findings. Moreover, we will explore the trade-off between detection speed and precision in more detail.
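One way to see why uncertainty estimation can raise precision without raising recall: assume, purely for illustration, that predictions whose estimated uncertainty exceeds a threshold are discarded. Removing a prediction can eliminate a false positive, but it can never recover an object the detector missed, so recall cannot increase. The detection records with an `uncertainty` value and an `is_true_positive` flag (obtained from matching against ground truth) are a hypothetical format chosen for this sketch.

```python
def filter_by_uncertainty(detections, max_uncertainty):
    """Keep only detections whose hypothetical 'uncertainty' field is at most max_uncertainty."""
    return [d for d in detections if d["uncertainty"] <= max_uncertainty]


def precision_recall_from_flags(detections, num_ground_truth):
    """Precision and recall from detections already flagged as true or false positives."""
    true_positives = sum(1 for d in detections if d["is_true_positive"])
    precision = true_positives / len(detections) if detections else 0.0
    recall = true_positives / num_ground_truth if num_ground_truth else 0.0
    return precision, recall
```

With such a filter, a stricter threshold trades recall for precision: the number of correctly found objects can only stay the same or drop.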

References

[1] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3213–3223, 2016.

[2] Glenn Jocher and Jing Qiu. Ultralytics YOLO11, 2024.

[3] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence Zitnick. Microsoft COCO: Common Objects in Context. In David Fleet, Tomas Pajdla, Bernt Schiele, and Tinne Tuytelaars, editors, Computer Vision – ECCV 2014, pages 740–755, Cham, 2014. Springer International Publishing.

[4] Younghyun Park, Wonjeong Choi, Soyeong Kim, Dong-Jun Han, and Jaekyun Moon. Active Learning for Object Detection with Evidential Deep Learning and Hierarchical Uncertainty Aggregation. In The Eleventh International Conference on Learning Representations (ICLR), 2023.

[5] Christos Sakaridis, Dengxin Dai, and Luc Van Gool. Semantic Foggy Scene Understanding with Synthetic Data. International Journal of Computer Vision, 126(9):973–992, September 2018.

[6] Sai Harsha Yelleni, Deepshikha Kumari, Srijith P.K., and Krishna Mohan C. Monte Carlo DropBlock for modeling uncertainty in object detection. Pattern Recognition, 146:110003, February 2024.